AWS resource mapping

Many production Kubernetes workloads depend on cloud resources, such as S3 buckets, RDS databases, and Lambda functions. In this tutorial, you will learn how Otterize can enhance your visibility into the AWS resources that are accessed by your workloads.

In this tutorial, you will:

- Set up an EKS cluster.

- Deploy two Lambda functions.

- Deploy a server pod that retrieves a joke (as a string) from one Lambda function, reviews it, and posts the review to another Lambda function.

- Automatically detect and monitor the Lambda function calls using Otterize.

By the end of this tutorial, you will understand how to map Kubernetes workloads and their associated AWS resources using Otterize.

Prerequisites

CLI tools

You will need the following CLI tools to work with this environment:

- AWS CLI - Ensure you have credentials within the target account that grant permissions to work with EKS clusters, IAM, CloudFormation, and Lambda functions.

- eksctl - This tool simplifies the creation and management of an EKS cluster.

Create an EKS cluster

Already have an EKS cluster? Skip to the next step.

Start by creating an EKS cluster for deploying your pods. Use the eksctl tool with the following YAML configuration:

curl https://docs.otterize.com/code-examples/aws-visibility/eks-cluster.yaml | eksctl create cluster -f -

Inspect eks-cluster.yaml contents

apiVersion: eksctl.io/v1alpha5
kind: ClusterConfig
metadata:
  name: otterize-tutorial-aws-visibility
  region: us-west-2
iam:
  withOIDC: true
vpc:
  clusterEndpoints:
    publicAccess: true
    privateAccess: true
addons:
  - name: vpc-cni
    version: 1.14.0
    attachPolicyARNs:
      - arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy
    configurationValues: |-
      enableNetworkPolicy: "true"
  - name: coredns
  - name: kube-proxy
managedNodeGroups:
  - name: x86-al2-on-demand
    amiFamily: AmazonLinux2
    instanceTypes: [ "t3.large" ]
    minSize: 0
    desiredCapacity: 2
    maxSize: 6
    privateNetworking: true
    disableIMDSv1: true
    volumeSize: 100
    volumeType: gp3
    volumeEncrypted: true
    tags:
      team: "eks"

Next, update your kubeconfig to connect to the new cluster:

aws eks update-kubeconfig --name otterize-tutorial-aws-visibility --region 'us-west-2'

Enable AWS Visibility with eBPF

First, install Otterize in your cluster. If your cluster is not already connected, connect it by following the Kubernetes setup instructions on the Integrations page.

When installing Otterize, append the following flag to the Helm command to enable the eBPF agent, which inspects SSL and non-SSL traffic and provides visibility into AWS traffic:

helm upgrade ... --set networkMapper.nodeagent.enable=true

Tutorial

Now that your environment is set up, you are ready to deploy the application components, including the AWS resources that Otterize will monitor.

Deploy two Lambda functions

First, you will deploy two Lambda functions: DadJokeLambdaFunction and FeedbackLambdaFunction. These functions will interact with your server pod to generate a dad joke training dataset. The server pod receives a joke from the DadJokeLambdaFunction, reviews the joke, and sends the feedback to the FeedbackLambdaFunction.
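The flow described above can be sketched in a few lines of Node.js. This is not the source of the server pod, which this tutorial does not show: `trainOnce`, `reviewJoke`, and `invoke` are hypothetical names, the review rule is invented for illustration, and the Lambda calls are replaced by an injected function so the sketch runs without AWS credentials.

```javascript
// Hypothetical sketch of the joke-review loop; not the actual server code.
// `invoke(functionArn, payload)` stands in for a real Lambda invocation and
// must return a promise that resolves to the function's response object.

// Illustrative review rule (an assumption): short jokes count as funny.
function reviewJoke(joke) {
  return joke.length <= 80;
}

async function trainOnce(invoke, dadJokeArn, feedbackArn) {
  // 1. Fetch a joke. The DadJokeLambda in this tutorial returns the
  //    icanhazdadjoke.com JSON document as a string in `body`.
  const jokeResponse = await invoke(dadJokeArn, {});
  const joke = JSON.parse(jokeResponse.body).joke;

  // 2. Review it.
  const funny = reviewJoke(joke);

  // 3. Post the review. The FeedbackLambda reads `joke` and `funny`
  //    from a JSON-encoded `body` field.
  await invoke(feedbackArn, { body: JSON.stringify({ joke, funny }) });
  return { joke, funny };
}

// Usage with a stub in place of AWS (placeholder ARNs, canned responses).
const fakeInvoke = async (arn, payload) =>
  arn === 'joke-arn'
    ? { statusCode: 200, body: JSON.stringify({ joke: 'I used to hate facial hair, but then it grew on me.' }) }
    : { statusCode: 200, body: JSON.stringify({ message: 'ok' }) };

trainOnce(fakeInvoke, 'joke-arn', 'feedback-arn').then((result) => console.log(result));
```

Injecting `invoke` keeps the control flow separate from the AWS client, so the same function could be wired to a real Lambda client later.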

To deploy the Lambda functions and their required roles, use the following command:

curl https://docs.otterize.com/code-examples/aws-visibility/cloudformation.yaml -o template.yaml && \
  aws cloudformation deploy --template-file template.yaml --stack-name OtterizeTutorialJokeTrainingStack --capabilities CAPABILITY_IAM --region 'us-west-2'

Inspect CloudFormation YAML

AWSTemplateFormatVersion: '2010-09-09'
Description: >-
  Stack creates two Lambda functions for dad jokes and feedback, plus the user and related policies
Resources:
  DadJokeLambdaExecutionRole:
    Type: 'AWS::IAM::Role'
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Principal:
              Service: lambda.amazonaws.com
            Action: 'sts:AssumeRole'
      Policies:
        - PolicyName: 'DadJokeLambdaExecutionPolicy'
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - 'logs:CreateLogGroup'
                  - 'logs:CreateLogStream'
                  - 'logs:PutLogEvents'
                Resource: 'arn:aws:logs:*:*:*'
              - Effect: Allow
                Action:
                  - 'execute-api:Invoke'
                Resource: '*'
  DadJokeLambda:
    Type: 'AWS::Lambda::Function'
    Properties:
      Handler: 'index.handler'
      Role: !GetAtt DadJokeLambdaExecutionRole.Arn
      Runtime: 'nodejs20.x'
      Code:
        ZipFile: |
          const https = require('https');
          exports.handler = async (event) => {
            return new Promise((resolve, reject) => {
              const options = {
                hostname: 'icanhazdadjoke.com',
                method: 'GET',
                headers: { 'Accept': 'application/json' }
              };
              const req = https.request(options, (res) => {
                let data = '';
                res.on('data', (chunk) => {
                  data += chunk;
                });
                res.on('end', () => {
                  resolve({
                    statusCode: 200,
                    body: data,
                    headers: { 'Content-Type': 'application/json' },
                  });
                });
              });
              req.on('error', (e) => {
                reject({
                  statusCode: 500,
                  body: 'Error fetching dad joke',
                });
              });
              req.end();
            });
          };
      Timeout: 10
  FeedbackLambda:
    Type: 'AWS::Lambda::Function'
    Properties:
      Handler: 'index.handler'
      Role: !GetAtt DadJokeLambdaExecutionRole.Arn
      Runtime: 'nodejs20.x'
      Code:
        ZipFile: |
          exports.handler = async (event) => {
            const payload = JSON.parse(event.body);
            const joke = payload.joke;
            const isFunny = payload.funny;
            console.log("Joke:", joke);
            console.log("Is it funny?", isFunny);
            return {
              statusCode: 200,
              body: JSON.stringify({ message: "Feedback received, Adding To Training Set" }),
            };
          };
      Timeout: 10
  LambdaInvokeUser:
    Type: 'AWS::IAM::User'
  LambdaInvokePolicy:
    Type: 'AWS::IAM::Policy'
    Properties:
      PolicyName: 'LambdaInvokePolicy'
      Users:
        - !Ref LambdaInvokeUser
      PolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action: 'lambda:InvokeFunction'
            Resource:
              - !GetAtt DadJokeLambda.Arn
              - !GetAtt FeedbackLambda.Arn
Outputs:
  DadJokeLambdaFunction:
    Description: "Dad Joke Lambda Function ARN"
    Value: !GetAtt DadJokeLambda.Arn
  FeedbackLambdaFunction:
    Description: "Feedback Lambda Function ARN"
    Value: !GetAtt FeedbackLambda.Arn
  LambdaInvokeUserAccessKeyId:
    Description: "IAM User Name for accessing Joke Lambda"
    Value: !Ref LambdaInvokeUser
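To see what payload the FeedbackLambda expects, its handler can be run locally with a hand-built event. In the sketch below the handler body is copied from the template; the sample event is an assumption about what a caller would send, not something taken from the server pod. Because the handler calls `JSON.parse(event.body)`, a direct invocation has to pass an object whose `body` field is a JSON-encoded string rather than a nested object.

```javascript
// Handler body copied from the FeedbackLambda definition in the template.
// It is bound to a local constant here instead of `exports.handler` so it
// can be called directly from the same script.
const handler = async (event) => {
  const payload = JSON.parse(event.body);
  const joke = payload.joke;
  const isFunny = payload.funny;
  console.log("Joke:", joke);
  console.log("Is it funny?", isFunny);
  return {
    statusCode: 200,
    body: JSON.stringify({ message: "Feedback received, Adding To Training Set" }),
  };
};

// Hypothetical event: `body` is a JSON string with the two fields
// the handler reads (`joke` and `funny`).
const sampleEvent = {
  body: JSON.stringify({
    joke: 'Have you ever heard of a music group called Cellophane? They mostly wrap.',
    funny: true,
  }),
};

// Invoke the handler locally and print the parsed response message.
handler(sampleEvent).then((response) => {
  console.log(response.statusCode, JSON.parse(response.body).message);
});
```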

Deploy server with access to Lambda functions

With the Lambda functions deployed, you're now ready to deploy your server pod and configure it to interact with the two Lambda functions. Follow these steps to set up your environment:

- Create a namespace:

kubectl create namespace otterize-tutorial-aws-visibility

- Retrieve Lambda ARNs:

DAD_JOKE_LAMBDA_ARN=$(aws cloudformation describe-stacks --region 'us-west-2' --stack-name OtterizeTutorialJokeTrainingStack --query "Stacks[0].Outputs[?OutputKey=='DadJokeLambdaFunction'].OutputValue" --output text)

FEEDBACK_LAMBDA_ARN=$(aws cloudformation describe-stacks --region 'us-west-2' --stack-name OtterizeTutorialJokeTrainingStack --query "Stacks[0].Outputs[?OutputKey=='FeedbackLambdaFunction'].OutputValue" --output text)

- Create a ConfigMap for Lambda ARNs:

kubectl create configmap lambda-arns \
  --from-literal=dadJokeLambdaArn=$DAD_JOKE_LAMBDA_ARN \
  --from-literal=feedbackLambdaArn=$FEEDBACK_LAMBDA_ARN \
  -n otterize-tutorial-aws-visibility

- Generate AWS credentials and create a Secret:

USER_NAME=$(aws cloudformation describe-stacks --region 'us-west-2' --stack-name OtterizeTutorialJokeTrainingStack --query "Stacks[0].Outputs[?OutputKey=='LambdaInvokeUserAccessKeyId'].OutputValue" --output text)

aws iam create-access-key --user-name "$USER_NAME" | \
  jq -r '"--from-literal=AWS_ACCESS_KEY_ID="+.AccessKey.AccessKeyId+" --from-literal=AWS_SECRET_ACCESS_KEY="+.AccessKey.SecretAccessKey' | \
  xargs kubectl create secret generic aws-credentials -n otterize-tutorial-aws-visibility

- Deploy the server pod:

kubectl apply -n otterize-tutorial-aws-visibility -f https://docs.otterize.com/code-examples/aws-visibility/all.yaml

Inspect deployment YAML

apiVersion: apps/v1
kind: Deployment
metadata:
  name: joketrainer
  namespace: otterize-tutorial-aws-visibility
spec:
  replicas: 1
  selector:
    matchLabels:
      app: joketrainer
  template:
    metadata:
      labels:
        app: joketrainer
    spec:
      containers:
        - name: joketrainer
          image: otterize/aws-visibility-tutorial:latest
          env:
            - name: AWS_ACCESS_KEY_ID
              valueFrom:
                secretKeyRef:
                  name: aws-credentials
                  key: AWS_ACCESS_KEY_ID
            - name: AWS_SECRET_ACCESS_KEY
              valueFrom:
                secretKeyRef:
                  name: aws-credentials
                  key: AWS_SECRET_ACCESS_KEY
            - name: DAD_JOKE_LAMBDA_ARN
              valueFrom:
                configMapKeyRef:
                  name: lambda-arns
                  key: dadJokeLambdaArn
            - name: FEEDBACK_LAMBDA_ARN
              valueFrom:
                configMapKeyRef:
                  name: lambda-arns
                  key: feedbackLambdaArn
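Inside the container, the four values wired up in the Deployment arrive as ordinary environment variables. The sketch below shows one way a Node.js process might read and validate them at startup. The real implementation inside the joketrainer image is not shown in this tutorial, so `loadConfig` and its return shape are hypothetical; the only outside fact relied on is the standard ARN layout `arn:partition:service:region:account-id:resource`.

```javascript
// Hypothetical startup check; the variable names match the Deployment's
// `env` entries, everything else is an illustrative assumption.
const REQUIRED_VARIABLES = [
  'AWS_ACCESS_KEY_ID',      // from the aws-credentials Secret
  'AWS_SECRET_ACCESS_KEY',  // from the aws-credentials Secret
  'DAD_JOKE_LAMBDA_ARN',    // from the lambda-arns ConfigMap
  'FEEDBACK_LAMBDA_ARN',    // from the lambda-arns ConfigMap
];

function loadConfig(env = process.env) {
  // Fail fast with a clear message if the Secret or ConfigMap was not mounted.
  const missing = REQUIRED_VARIABLES.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(', ')}`);
  }

  // The region is the fourth colon-separated field of an ARN.
  const regionOf = (arn) => arn.split(':')[3];

  return {
    dadJokeLambdaArn: env.DAD_JOKE_LAMBDA_ARN,
    feedbackLambdaArn: env.FEEDBACK_LAMBDA_ARN,
    region: regionOf(env.DAD_JOKE_LAMBDA_ARN),
  };
}

// Usage with placeholder values (the account ID and function names are made up).
const exampleConfig = loadConfig({
  AWS_ACCESS_KEY_ID: 'example-key-id',
  AWS_SECRET_ACCESS_KEY: 'example-secret',
  DAD_JOKE_LAMBDA_ARN: 'arn:aws:lambda:us-west-2:123456789012:function:example-joke',
  FEEDBACK_LAMBDA_ARN: 'arn:aws:lambda:us-west-2:123456789012:function:example-feedback',
});
console.log(exampleConfig.region);
```

Validating at startup means that a missing ConfigMap key or Secret shows up as an explicit error in the pod logs rather than as a failed Lambda call later.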

Once the pod is deployed, check its logs to confirm that it is successfully calling the Lambda functions:

kubectl logs -f -n otterize-tutorial-aws-visibility deploy/joketrainer

Example output:

Joke: People saying 'boo! to their friends has risen by 85% in the last year.... That's a frightening statistic.

Sending Feedback of Funny?: Yes

Joke: Have you ever heard of a music group called Cellophane? They mostly wrap.

Sending Feedback of Funny?: Yes

Joke: What did Yoda say when he saw himself in 4K? "HDMI"

Sending Feedback of Funny?: No

Visualize Relationships

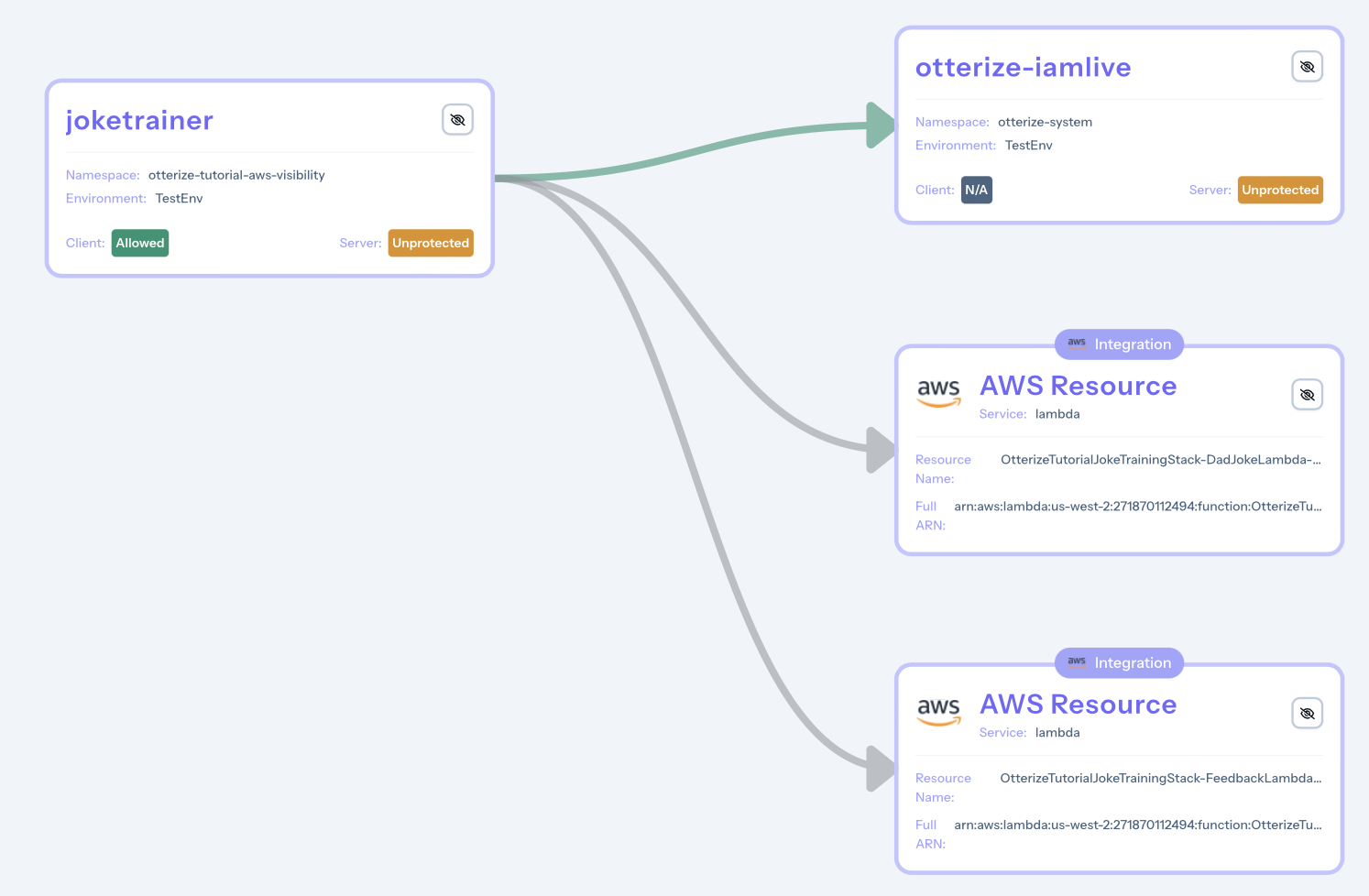

With Otterize monitoring the AWS API calls, access Otterize Cloud to observe both Lambdas in action. The Access graph will display two AWS resources associated with the joketrainer pod: DadJokeLambdaFunction and FeedbackLambdaFunction.

What's Next

Now that you have learned how to enable visibility for AWS resources in your Kubernetes cluster, you are ready to explore how to manage access to these resources. Continue your learning journey with the next tutorial: Automate AWS IAM for EKS.

Cleanup

To remove the deployed example:

kubectl delete namespace otterize-tutorial-aws-visibility

To remove the Lambda functions:

aws cloudformation delete-stack --stack-name OtterizeTutorialJokeTrainingStack --region 'us-west-2'

To remove the EKS cluster:

eksctl delete cluster --name otterize-tutorial-aws-visibility --region us-west-2