Egress NetworkPolicy Automation

Let’s learn how Otterize automates egress access control with network policies.

In this tutorial, we will:

- Deploy an example cluster consisting of a frontend for a personal advice application and a server with an external dependency to retrieve wisdom.

- Declare ClientIntents for each workload, including public internet and internal network egress intents.

- See that network policies were autogenerated to allow just the declared traffic and block any undeclared calls.

- Revise our intent to use DNS records to tie our network policies to domain names.

Prerequisites

Install Otterize on your cluster

To deploy Otterize, head over to Otterize Cloud, navigate to the Integrations page, and follow the instructions to integrate your cluster. Make sure that you toggle Enforcement to On when configuring the integration on Otterize Cloud.

Note: Egress policy creation is off by default. We must add the following flag when installing Otterize to enable egress policy creation.

--set intentsOperator.operator.enableEgressNetworkPolicyCreation=true

Tutorial

Deploy the cluster

This will set up the namespace we will use for our tutorial and deploy the cluster containing our front and backend pods. Upon deployment, our cluster will have no network policies in place.

kubectl create namespace otterize-tutorial-egress-access

kubectl apply -n otterize-tutorial-egress-access -f https://docs.otterize.com/code-examples/egress-access-control/all.yaml

About Network Policies

By default, in Kubernetes, pods are non-isolated, meaning they accept traffic from any source and can send traffic to any destination. When you introduce policies, either ingress or egress, the pods they select become isolated for that direction, and any connection not explicitly allowed will be rejected. When an ingress policy type is introduced, any incoming traffic that does not match a rule will be rejected. Similarly, when an egress policy type is introduced, any traffic that does not match a rule will not be allowed out of the pod.
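The isolation rule described above can be sketched in a few lines of Python. This is a simplified model for illustration only, not the Kubernetes API: the `Policy` class, its fields, and the `egress_permitted` function are hypothetical names, and the sketch covers only the egress side (in a real cluster, the destination pod's ingress policies must also allow the connection).

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Simplified stand-in for a NetworkPolicy (hypothetical model)."""
    selects: set                 # names of the pods the policy applies to
    policy_types: set            # subset of {"Ingress", "Egress"}
    egress_allowed: set = field(default_factory=set)  # destinations allowed out


def egress_permitted(pod, destination, policies):
    """Return True if `pod` may open a connection to `destination`."""
    applicable = [p for p in policies
                  if pod in p.selects and "Egress" in p.policy_types]
    if not applicable:
        # No egress policy selects the pod: it is non-isolated for egress.
        return True
    # Isolated: allowed only if at least one applicable policy has a matching rule.
    return any(destination in p.egress_allowed for p in applicable)


# A default-deny policy with no rules, plus one policy that allows a single destination.
deny_all = Policy(selects={"frontend", "backend"}, policy_types={"Egress"})
allow_api = Policy(selects={"backend"}, policy_types={"Egress"},
                   egress_allowed={"external-api"})
```

Policies are additive in this model, as they are in Kubernetes: adding `allow_api` next to `deny_all` opens exactly one destination for the backend and changes nothing for the frontend.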

Stringent policies can be essential in certain sectors, such as healthcare, finance, and government. Implementing egress policies is crucial in minimizing attack surfaces by concealing workloads or restricting the exposure of any compromised workloads. However, challenges may emerge when egress policies are applied to workloads dependent on external communications that were not initially accounted for. These external communications could include DNS, time synchronization, package repositories, logging, telemetry, cloud services, authentication, or other critical external dependencies that, while not directly related to a pod's primary functionality, are vital for its operation.

Otterize helps alleviate these issues by capturing and mapping the ingress and egress connections used by your pods and then suggesting policies that preserve the traffic it has observed. You can also enable shadow enforcement to see which connections would be blocked without committing to active enforcement.

Defining our intents

We aim to secure the network in our example cluster by introducing a default deny policy for our entire network and policies for each pod’s appropriate ingress and egress needs.

- Frontend - Needs to retrieve advice from our backend. This will result in an egress policy on our frontend and an ingress policy on our backend.

- Backend - Needs to be able to accept requests from our frontend and communicate with an external API. This will result in an ingress policy on our backend for the frontend's requests and an egress policy on our backend for the external API.

As previously mentioned, the pods will be non-isolated by default, and everything will work. Check the logs for the frontend workload to see the free advice flowing:

kubectl logs -f -n otterize-tutorial-egress-access deploy/frontend

Example log output:

The answer to all your problems is to:

The sun always shines above the clouds.

The answer to all your problems is to:

Stop procrastinating.

The answer to all your problems is to:

Don't feed Mogwais after midnight.

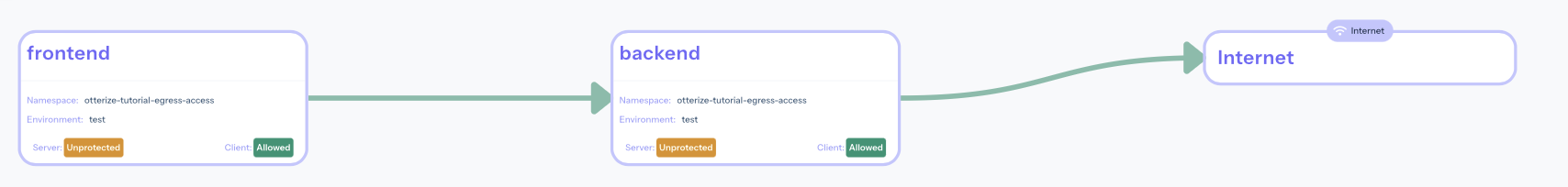

View of our non-isolated cluster within Otterize Cloud

Applying our intents

Given that this is a serious advice application, we want to lock down our pods to ensure no outside interference can occur.

To enforce strict communication rules for our workloads, we will start by applying a default deny policy, ensuring that only explicitly defined connections are allowed. You’ll see that we are allowing UDP on port 53 to support any DNS lookups we need. Without DNS support, our pods could neither resolve the in-cluster service names (frontend, backend) to their internal IP addresses nor resolve the domain names used by our external advice API service.

kubectl apply -n otterize-tutorial-egress-access -f https://docs.otterize.com/code-examples/egress-access-control/default-deny-policy.yaml

Default Deny Policy

---
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny
spec:
  podSelector: {}
  policyTypes:
    - Egress
    - Ingress
  egress:
    - ports:
        - protocol: UDP
          port: 53

You can now see in the logs that the pods are isolated from each other and the public internet:

kubectl logs -f -n otterize-tutorial-egress-access deploy/frontend

Example log output from frontend pod:

Unable to connect to the backend

Unable to connect to the backend

Unable to connect to the backend

Now that we have secured our broader network, we will apply the following ClientIntents to enable traffic for our workloads.

kubectl apply -n otterize-tutorial-egress-access -f https://docs.otterize.com/code-examples/egress-access-control/intents.yaml

apiVersion: k8s.otterize.com/v2beta1
kind: ClientIntents
metadata:
  name: frontend
  namespace: otterize-tutorial-egress-access
spec:
  workload:
    name: frontend
    kind: Deployment
  targets:
    - service:
        name: backend
---
apiVersion: k8s.otterize.com/v2beta1
kind: ClientIntents
metadata:
  name: backend
  namespace: otterize-tutorial-egress-access
spec:
  workload:
    name: backend
    kind: Deployment
  targets:
    - internet:
        ips:
          - 185.53.57.80 # IP address of our external API
          # Can also be specified as a CIDR
          # - 185.53.57.0/24
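To make the difference between the single IP address and the commented-out CIDR in the intents above concrete, here is a small sketch using Python's standard `ipaddress` module. It only illustrates how much address space each form covers; the `allowed` helper is a hypothetical name and does not reflect how Otterize or Kubernetes evaluate policies internally.

```python
import ipaddress

# The single address from the intent, written as a /32 network, and the wider /24 alternative.
single = ipaddress.ip_network("185.53.57.80/32")
block = ipaddress.ip_network("185.53.57.0/24")


def allowed(ip, networks):
    """Return True if `ip` falls inside any of the given networks."""
    address = ipaddress.ip_address(ip)
    return any(address in network for network in networks)


print(single.num_addresses)               # a /32 covers exactly one address
print(block.num_addresses)                # a /24 covers 256 addresses
print(allowed("185.53.57.80", [single]))  # the API address itself
print(allowed("185.53.57.81", [single]))  # a neighbouring address, outside the /32
print(allowed("185.53.57.81", [block]))   # the same neighbour, inside the /24
```

A wider CIDR tolerates the service moving within its range, at the cost of allowing egress to every other host in that range.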

Now, our network and our workloads are only able to open connections to those internal and external resources that are explicitly needed. Below, we can inspect the five different NetworkPolicies generated by Otterize and look at the annotations to see how these policies match their applied pods and designated traffic rules.

- ingress-backend

- ingress-frontend

- egress-backend

- egress-frontend

- egress-public-backend

Name: access-to-backend-from-otterize-tutorial-egress-access
Namespace: otterize-tutorial-egress-access
Created on: 2024-02-25 12:20:52 -0800 PST
Labels: intents.otterize.com/network-policy=backend-otterize-tutorial-eg-00531a
Annotations: none
Spec:
  # Selector specifying the pods to which the policy applies. In this case, it targets pods labeled as backend.
  # Otterize automatically adds these labels, ensuring they persist across deployments and multiple instances.
  PodSelector: intents.otterize.com/server=backend-otterize-tutorial-eg-00531a
  Allowing ingress traffic:
    # Specifies that the policy allows traffic to any port on the selected pods.
    To Port: any (traffic allowed to all ports)
    From:
      # Specifying the namespace for our pod selector
      NamespaceSelector: kubernetes.io/metadata.name=otterize-tutorial-egress-access
      # Further refines the allowed sources of ingress to only those pods with the Otterize managed label
      PodSelector: intents.otterize.com/access-backend-otterize-tutorial-eg-00531a=true
  Not affecting egress traffic
  # Specifies that this policy only applies to incoming traffic to the selected pods.
  Policy Types: Ingress

Name: access-to-frontend-from-otterize-tutorial-egress-access
Namespace: otterize-tutorial-egress-access
Created on: 2024-02-25 12:20:52 -0800 PST
Labels: intents.otterize.com/network-policy=frontend-otterize-tutorial-eg-2bb536
Annotations: none
Spec:
  # Selector specifying the pods to which the policy applies. In this case, it targets pods labeled as frontend.
  # Otterize automatically adds these labels, ensuring they persist across deployments and multiple instances.
  PodSelector: intents.otterize.com/server=frontend-otterize-tutorial-eg-2bb536
  Allowing ingress traffic:
    # Specifies that the policy permits traffic to any port on the selected pods
    To Port: any (traffic allowed to all ports)
    From:
      # Specifying the namespace for our pod selector
      NamespaceSelector: kubernetes.io/metadata.name=otterize-tutorial-egress-access
      # Further refines the allowed sources of ingress to only those pods with the Otterize managed label
      PodSelector: intents.otterize.com/access-frontend-otterize-tutorial-eg-2bb536=true
  Not affecting egress traffic
  # Specifies that this policy only applies to incoming traffic to the selected pods.
  Policy Types: Ingress

Name: egress-to-backend.otterize-tutorial-egress-access-from-frontend
Namespace: otterize-tutorial-egress-access
Created on: 2024-02-25 12:20:52 -0800 PST
Labels: intents.otterize.com/egress-network-policy=frontend-otterize-tutorial-eg-2bb536
        intents.otterize.com/egress-network-policy-target=backend-otterize-tutorial-eg-00531a
Annotations: none
Spec:
  # This selector targets the pods to which the policy applies. Here, it targets pods that Otterize labeled as the "frontend" client.
  # Otterize automatically adds these labels, ensuring they persist across deployments and multiple instances.
  PodSelector: intents.otterize.com/client=frontend-otterize-tutorial-eg-2bb536
  Not affecting ingress traffic
  Allowing egress traffic:
    # Specifies that the policy allows egress traffic to any port
    To Port: any (traffic allowed to all ports)
    To:
      # Specifying the namespace for our pod selector
      NamespaceSelector: kubernetes.io/metadata.name=otterize-tutorial-egress-access
      # Further refines the allowed destinations of egress to only those pods with the Otterize managed label
      PodSelector: intents.otterize.com/server=backend-otterize-tutorial-eg-00531a
  # Specifies that this policy only applies to outbound traffic from the selected pods.
  Policy Types: Egress

Name: egress-to-frontend.otterize-tutorial-egress-access-from-backend
Namespace: otterize-tutorial-egress-access
Created on: 2024-02-25 12:20:52 -0800 PST
Labels: intents.otterize.com/egress-network-policy=backend-otterize-tutorial-eg-00531a
        intents.otterize.com/egress-network-policy-target=frontend-otterize-tutorial-eg-2bb536
Annotations: none
Spec:
  # This selector targets the pods to which the policy applies. Here, it targets pods that Otterize labeled as the "backend" client.
  # Otterize automatically adds these labels, ensuring they persist across deployments and multiple instances.
  PodSelector: intents.otterize.com/client=backend-otterize-tutorial-eg-00531a
  Not affecting ingress traffic
  Allowing egress traffic:
    # Specifies that the policy allows egress traffic to any port
    To Port: any (traffic allowed to all ports)
    To:
      # Specifying the namespace for our pod selector
      NamespaceSelector: kubernetes.io/metadata.name=otterize-tutorial-egress-access
      # Further refines the allowed destinations of egress to only those pods with the Otterize managed label
      PodSelector: intents.otterize.com/server=frontend-otterize-tutorial-eg-2bb536
  # Specifies that this policy only applies to outbound traffic from the selected pods.
  Policy Types: Egress

Name: egress-to-internet-from-backend
Namespace: otterize-tutorial-egress-access
Created on: 2024-02-25 12:20:52 -0800 PST
Labels: intents.otterize.com/egress-internet-network-policy=backend-otterize-tutorial-eg-00531a
Annotations: none
Spec:
  # This selector targets the pods to which the policy applies. Here, it targets pods that Otterize labeled as the "backend" client.
  # Otterize automatically adds these labels, ensuring they persist across deployments and multiple instances.
  PodSelector: intents.otterize.com/client=backend-otterize-tutorial-eg-00531a
  Not affecting ingress traffic
  Allowing egress traffic:
    # Specifies that the policy allows egress traffic to any port
    To Port: any (traffic allowed to all ports)
    To:
      # Specifies the IP address range to which the policy allows egress traffic. A /32 CIDR denotes a single IP address, so this policy targets only the IP address associated with our advice API.
      IPBlock:
        CIDR: 185.53.57.80/32
        # The 'Except' field allows specifying IP addresses within the defined CIDR range that should be excluded, but it is empty in this case.
        Except:
  # Specifies that this policy only applies to outbound traffic from the selected pods.
  Policy Types: Egress

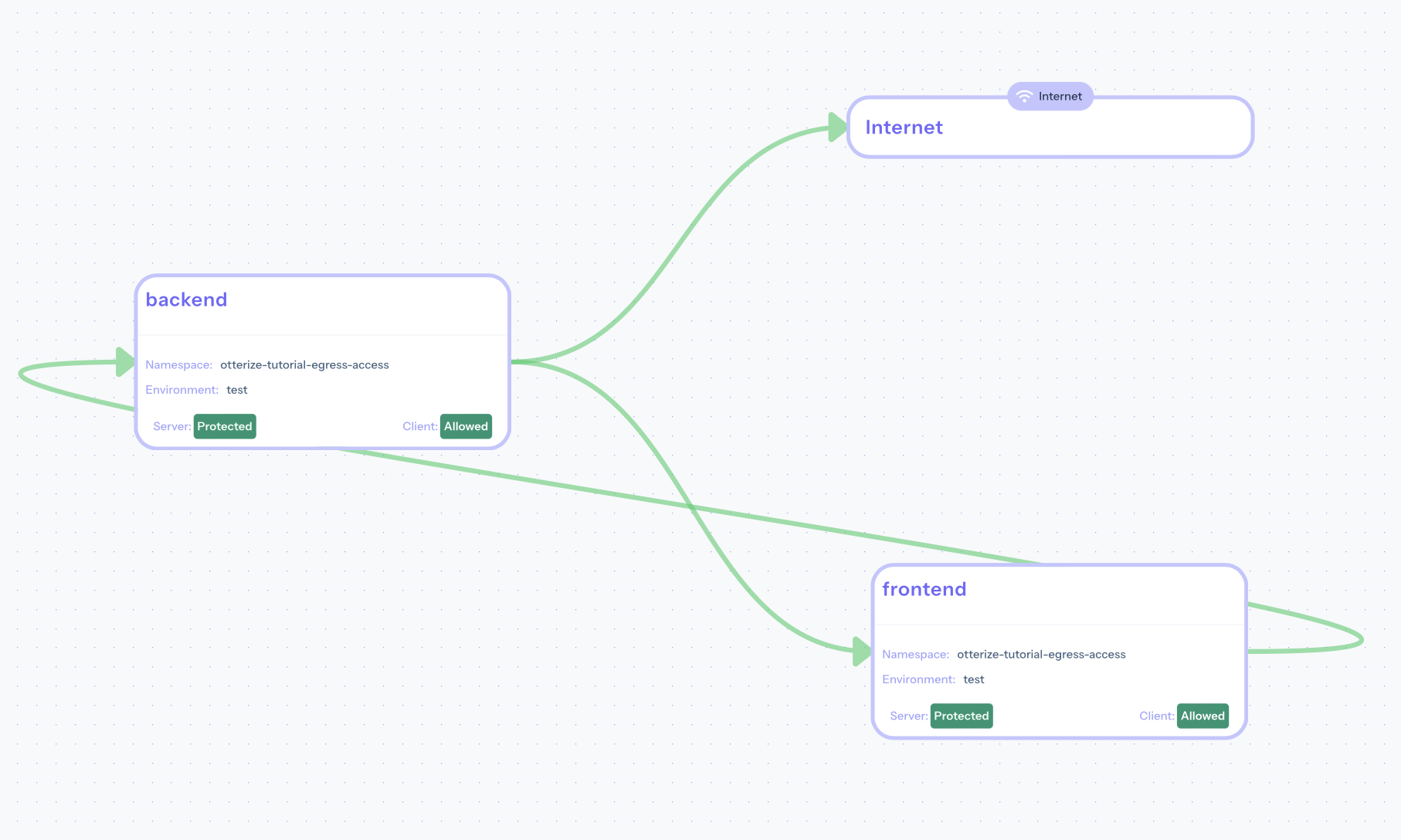

The protected network can be seen on Otterize Cloud:

Using DNS and domain names in ClientIntents

The preceding example used a static IP address in our intents. In practice, an external service is usually reached through a domain name rather than a static IP address. NetworkPolicies have no native way to reference domain names, which makes it hard to write policies for services whose IP addresses change. ClientIntents can incorporate domain names, DNS records, and static IP addresses, which offers a flexible solution to this challenge. Below is a revised version of our ClientIntents that uses a domain name.

apiVersion: k8s.otterize.com/v2beta1
kind: ClientIntents
metadata:
  name: frontend
  namespace: otterize-tutorial-egress-access
spec:
  workload:
    name: frontend
    kind: Deployment
  targets:
    - service:
        name: backend
---
apiVersion: k8s.otterize.com/v2beta1
kind: ClientIntents
metadata:
  name: backend
  namespace: otterize-tutorial-egress-access
spec:
  workload:
    name: backend
    kind: Deployment
  targets:
    - internet:
        domains:
          # Domain name for our advice service
          - api.adviceslip.com

In the above YAML file, we have replaced the IP address with our service’s domain name.

Otterize will now track the resolved IP addresses for api.adviceslip.com and add them to NetworkPolicies within your clusters.

Alternatively, we can use domains prefixed with a wildcard. Otterize will try to match outgoing egress requests to wildcard domains by monitoring the IPs in DNS responses, so requests to api.adviceslip.com will be paired with *.adviceslip.com. Since Otterize cannot resolve the IP addresses of *.adviceslip.com itself, it relies on the DNS responses received by pods in the cluster.
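The pairing of observed hostnames with wildcard domains can be pictured with the following Python sketch. It is an illustration of the idea only, not Otterize's implementation: `matches_domain` and `record_dns_response` are hypothetical helpers, and the sketch assumes that a wildcard matches subdomains at any depth but not the bare parent domain.

```python
def matches_domain(hostname, pattern):
    """Return True if `hostname` matches an exact or wildcard (`*.`) domain pattern."""
    hostname = hostname.lower().rstrip(".")
    pattern = pattern.lower().rstrip(".")
    if pattern.startswith("*."):
        suffix = pattern[1:]  # "*.adviceslip.com" -> ".adviceslip.com"
        return hostname.endswith(suffix) and len(hostname) > len(suffix)
    return hostname == pattern


def record_dns_response(resolved, hostname, ips, declared_patterns):
    """Attach the IPs seen in a DNS response to every declared pattern the hostname matches."""
    for pattern in declared_patterns:
        if matches_domain(hostname, pattern):
            resolved.setdefault(pattern, set()).update(ips)
    return resolved


# A pod looked up api.adviceslip.com and the DNS response carried one address.
resolved = record_dns_response({}, "api.adviceslip.com", ["185.53.57.80"],
                               ["*.adviceslip.com"])
```

Because the wildcard has no address of its own, the `resolved` mapping stays empty until some pod in the cluster actually performs a lookup for a matching hostname.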

Let’s deploy the revised intent with the command below:

kubectl apply -n otterize-tutorial-egress-access -f https://docs.otterize.com/code-examples/egress-access-control/domain-intents.yaml

After the updated definition takes effect, we can describe the backend ClientIntents with the command below to find some additions.

kubectl describe clientintents -n otterize-tutorial-egress-access backend --show-events=false

View Updated ClientIntent Description

Name: backend
Namespace: otterize-tutorial-egress-access
Labels: none
Annotations: none
API Version: k8s.otterize.com/v2beta1
Kind: ClientIntents
Metadata:
  Creation Timestamp: 2024-03-08T18:53:40Z
  Finalizers:
    intents.otterize.com/client-intents-finalizer
  Generation: 2
  Resource Version: 2122
  UID: c93d8cc4-2a8f-404e-b06c-1935176f1dc8
Spec:
  targets:
    Internet:
      Domains:
        api.adviceslip.com
    Service:
      Name: frontend
  Workload:
    Name: backend
Status:
  Observed Generation: 2
  Resolved I Ps:
    Dns: api.adviceslip.com
    Ips:
      185.53.57.80
  Up To Date: true

Otterize identifies the IP addresses associated with the domain and records them in a new section of the status block. This tells the intents operator to adjust the network policies dynamically whenever new IP addresses are detected for the specified domain name. For services that use dynamic IP addresses, each discovered IP address is added to the network policy. Further details on DNS intents can be found in the Reference.
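As a rough picture of how resolved addresses could end up in a policy, the Python sketch below turns a list of resolved IPs into NetworkPolicy-style `ipBlock` peers, one single-address CIDR per IP. This is an assumption-laden illustration rather than the operator's actual code: `egress_peers_for` is a hypothetical helper, and only the `ipBlock` and `cidr` field names come from the Kubernetes NetworkPolicy format.

```python
import ipaddress


def egress_peers_for(resolved_ips):
    """Build one single-address ipBlock peer per unique resolved IP, in a stable order."""
    unique = {ipaddress.ip_address(ip) for ip in resolved_ips}
    ordered = sorted(unique, key=lambda ip: (ip.version, int(ip)))
    # max_prefixlen is 32 for IPv4 and 128 for IPv6, so each CIDR covers exactly one address.
    return [{"ipBlock": {"cidr": f"{ip}/{ip.max_prefixlen}"}} for ip in ordered]


# First resolution, then a later one in which the domain also returns a second address.
first = egress_peers_for(["185.53.57.80"])
later = egress_peers_for(["185.53.57.80", "185.53.57.81", "185.53.57.80"])
```

With the address from the status block above, `first` contains the same `185.53.57.80/32` entry that appears in the generated egress-to-internet policy, and `later` shows the list growing as additional addresses are discovered.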

Teardown

To remove the deployed examples, run the following:

kubectl delete namespace otterize-tutorial-egress-access